Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

Accelerating Scikit-Image API with cuCIM: n-Dimensional Image Processing and I/O on GPUs | NVIDIA Technical Blog

Should Sklearn add new gpu-version for tuning parameters faster in the future? · scikit-learn/scikit-learn · Discussion #19185 · GitHub

Tensors are all you need. Speed up Inference of your scikit-learn… | by Parul Pandey | Towards Data Science

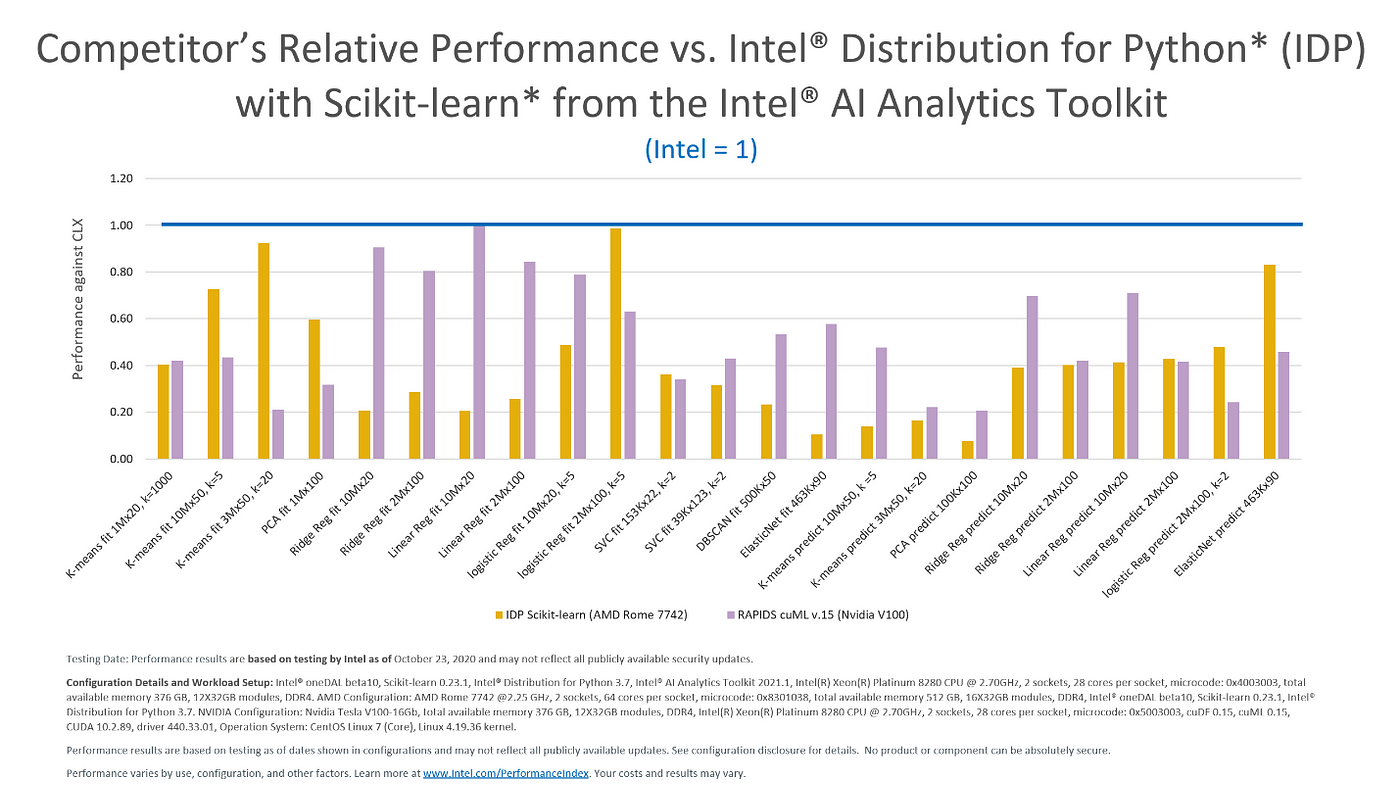

Intel Gives Scikit-Learn the Performance Boost Data Scientists Need | by Rachel Oberman | Intel Analytics Software | Medium

The Scikit-Learn Allows for Custom Estimators to Run on CPUs, GPUs and Multiple GPUs - Data Science of the Day - NVIDIA Developer Forums

GPU Acceleration, Rapid Releases, and Biomedical Examples for scikit-image - Chan Zuckerberg Initiative